Key Insights to Celery

In this post we will look at a basic introduction to Celery, its installation with Django, configurations that help in various scenarios, and some commonly faced issues while using Celery.

Celery

“Celery” is an asynchronous task queue/job queue based on distributed message passing. It is focused on real-time operation, but supports scheduling as well.

Where can it be used?

Celery is used where you want to execute tasks in parallel without blocking your current code flow, such as non-urgent long-running tasks or many small concurrent tasks. It can also run background jobs at regular intervals, for example creating log reports or sending emails.

It is also used to create workflows and schedule them to execute at regular intervals or via API.

How does Celery work?

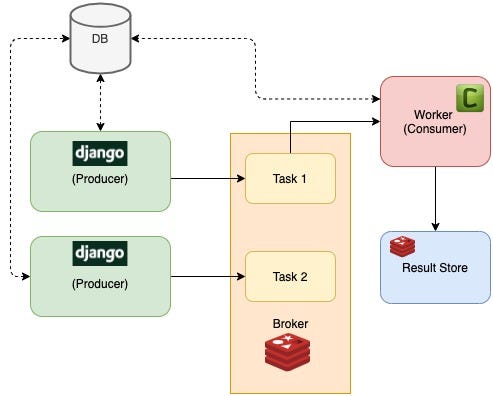

The picture above gives a brief illustration of how Celery works in real life: our applications act as producers, and workers (processes) act as consumers, eagerly waiting for tasks to be put in a queue so they can consume them. To store tasks until they are consumed, and to persist task results, we use Redis, RabbitMQ, etc.

Getting Started

Installing Celery

pip install celery==3.1.18

If you are using Django, your layout will look something like this:

- proj/
  - manage.py
  - proj/
    - __init__.py
    - settings.py
    - urls.py

Create a file named celery.py in proj/proj/.

Then you need to import this app in your proj/proj/__init__.py module. This ensures that the app is loaded when Django starts so that the @shared_task decorator (mentioned later) will use it:
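A sketch of that import, assuming the Celery instance in celery.py is named app (the alias celery_app is only a convention):

```python
# proj/proj/__init__.py
# Import the Celery app when Django starts so @shared_task can bind to it.
from .celery import app as celery_app

__all__ = ("celery_app",)
```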

The @shared_task decorator lets you create tasks without having any concrete app instance.

Example:

For a detailed explanation, refer here.

Configuration

Celery offers a lot of options that can be used to optimize memory usage and reduce execution time as per our needs. Some configurations depend on the system and on the kind of broker used for queuing.

For more detailed insight into all options and configurations, you can refer here. I am going to mention some scenarios where certain configurations can play a vital role in achieving better performance.

Long running and high memory consuming tasks

At times we run CPU-bound tasks on Celery that have high memory requirements and low concurrency.

Some of the worker configurations that can help are as follows:

- worker_max_memory_per_child

Default: No limit. Type: int (kilobytes)

Maximum amount of resident memory, in kilobytes, that may be consumed by a worker before it will be replaced by a new worker. If a single task causes a worker to exceed this limit, the task will be completed, and the worker will be replaced afterwards.

- worker_max_tasks_per_child

Default: No limit.

Maximum number of tasks a pool worker process can execute before it’s replaced with a new one. At times, having multiple open connections to a database causes memory leaks. Replacing the worker after a certain number of tasks avoids that.

- worker_prefetch_multiplier

Default: 4 (four messages for each process).

The number of messages to prefetch at a time, multiplied by the number of concurrent processes. The default setting is usually a good choice. However, if you have very long-running tasks waiting in the queue and you have to start the workers, note that the first worker to start will receive four times the number of messages initially, so the tasks may not be fairly distributed to the workers. At times you might want to lower this number so that fewer tasks are fetched into memory, which reduces memory consumption. You can read more about them here.

High and Low Priority Tasks

RabbitMQ supports priorities since version 3.5.0, and the Redis transport emulates priority support.

However, it is preferable to route high-priority and low-priority tasks to separate workers.

You can read about handling priority tasks here.

Most of the time you may want to have a fair distribution of tasks across workers.

By default, preforking Celery workers distribute tasks to their worker processes as soon as they are received, regardless of whether the process is currently busy with other tasks. The -Ofair option disables this behavior, waiting to distribute tasks until each worker process is available for work.

Scaling Processes as the number of tasks increases

The autoscaler component is used to dynamically resize the pool based on load. You can specify a minimum and a maximum number of processes.

It’s enabled by the --autoscale option.

Example:

--autoscale=10,3 (always keep 3 processes, but grow to 10 if necessary).

Maintaining Multiple Queues

There are many ways to configure queues and how tasks are picked up from them. We can define them in broker_transport_options.

Example:

    app.conf.broker_transport_options = {
        'queue_order_strategy': 'round_robin',
    }

This will ensure tasks from multiple queues are fetched in a round-robin manner. Other options are FCFS, sorted, priority.

Handling IO bound tasks with High Concurrency

For handling many small concurrent tasks, you might want to have a look at eventlet and gevent.

Celery supports four execution pool implementations: prefork (default), solo, eventlet and gevent. Eventlet and gevent are two alternative execution pool implementations. Both use greenlets rather than operating system threads.

Why Eventlet and Gevent?

If you run a single-process execution pool, you can only handle one request at a time. If your tasks make thousands of GET requests, completing them takes a long time. So you spawn more processes. But there is a tipping point where adding more processes to the execution pool hurts performance. The overhead of managing the process pool becomes more expensive than the marginal gain from an additional process.

In this scenario, spawning hundreds (or even thousands) of lightweight threads is much more efficient.

Greenlets emulate multi-threaded environments without relying on any native operating system capabilities. Greenlets are managed in application space and not in kernel space. There is no scheduler pre-emptively switching between your threads at any given moment. Instead your greenlets voluntarily or explicitly give up control to one another at specified points in your code.

This makes greenlets excel at running a huge number of non-blocking tasks. Your application can schedule things much more efficiently. For a large number of tasks this can be a lot more scalable than letting the operating system interrupt and awaken threads arbitrarily.

The benefit of using a gevent or eventlet pool is that a Celery worker can do more work than it could before.
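The cooperative hand-off described above can be illustrated with plain Python generators. This is only a toy stand-in for the idea, not the greenlet, eventlet or gevent API; the function names are made up for the sketch.

```python
from collections import deque


def fetch(name, steps, log):
    """A toy task that gives up control at each simulated I/O wait."""
    for step in range(steps):
        log.append(f"{name}:{step}")
        yield  # explicit hand-off, like a greenlet blocking on I/O


def run_round_robin(tasks):
    """Resume each task in turn until all of them have finished."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)
        except StopIteration:
            continue  # task finished; do not reschedule it
        ready.append(task)


log = []
run_round_robin([fetch("a", 2, log), fetch("b", 2, log)])
print(log)  # ['a:0', 'b:0', 'a:1', 'b:1']
```

No task is ever interrupted in the middle of a step. Each one runs until it chooses to yield, which is why this style suits work that spends most of its time waiting on I/O.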

Common Issues

1. Not using Database transactions in tasks

At times we forget to use transaction.atomic() which leads to data integrity issues. You could even add a project-specific wrapper for Celery’s @shared_task that adds @atomic to your tasks.

2. High CPU & RAM Usage

At times --max-memory-per-child is set too low, which leads to workers being killed and restarted as soon as they reach their memory limit. You might consider using --max-tasks-per-child instead.

Another issue is using very high concurrency for CPU-intensive tasks. Concurrency should usually be set equal to, or a multiple of, the number of cores/processors available.

3. Worker not consuming tasks

This is quite a common issue while working with Celery. Usually one task is stuck on a worker, which leads to starvation of other tasks.

The following command can be used to check active tasks.

celery -A project inspect active

This can be avoided by setting task timeouts (time_limit).

4. Using retries

Auto retry gives the ability to retry a task with the same arguments when a specific exception occurs. It’s always a good idea to set max_retries to prevent infinite loops from occurring.

Dealing with Issues

Many other unexpected issues may arise while working with Celery. The key to avoiding and resolving them is proper logging and monitoring.

A commonly used monitoring tool is Flower. Celery also provides various command line management utilities for debugging and monitoring.

Conclusion

I tried to cover some of the most common scenarios faced by engineers in their day-to-day application development. Celery is extremely powerful and is used widely for various purposes.

I hope you enjoyed the read and the information here helps you build better Celery-enabled applications. Feel free to comment and share your feedback below.